MattGPT

I run a local GPT platform based on Open WebUI with bundled Ollama. I have also added it to my SSO (Authelia, over OpenID Connect), which I think is pretty neat. This means that if you want access, you can ask me for it.

Here's my Docker Compose file.

docker-compose.yaml

    services:
      ollama:
        image: ghcr.io/open-webui/open-webui:ollama
        container_name: mattgpt
        volumes:
          - ollama:/root/.ollama
          - open-webui:/app/backend/data
        environment:
          WEBUI_URL: 'https://gpt.domain.com'
          ENABLE_OAUTH_SIGNUP: 'true'
          OAUTH_MERGE_ACCOUNTS_BY_EMAIL: 'true'
          OAUTH_CLIENT_ID: 'gpt-oidc'
          OAUTH_CLIENT_SECRET: 'makeupanicelongstringofletters'
          OPENID_PROVIDER_URL: 'https://auth.domain.com/.well-known/openid-configuration'
          OAUTH_PROVIDER_NAME: 'Authelia'
          OAUTH_SCOPES: 'openid email profile groups'
          ENABLE_OAUTH_ROLE_MANAGEMENT: 'true'
          OAUTH_ALLOWED_ROLES: 'gpt-access,gpt-admin'
          OAUTH_ADMIN_ROLES: 'gpt-admin'
          OAUTH_ROLES_CLAIM: 'groups'
        ports:
          - 8080:8080
        restart: always
        network_mode: bridge
        deploy:
          resources:
            reservations:
              devices:
                - driver: nvidia
                  count: 2
                  capabilities: [gpu]

    volumes:
      ollama:
        driver: local # Define the driver and options under the volume name
        driver_opts:
          type: none
          device: ./ollama
          o: bind
      open-webui:
        driver: local # Define the driver and options under the volume name
        driver_opts:
          type: none
          device: ./open-webui
          o: bind
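
The Compose file only covers the Open WebUI side of the SSO setup. For completeness, here is a rough sketch of what the matching client entry on the Authelia side might look like. This is not copied from my actual Authelia config: the key names assume a recent Authelia release (4.38 or later; older releases use different key names), the `two_factor` policy is just an example, and the secret digest is a placeholder. The callback path `/oauth/oidc/callback` is the one Open WebUI uses for generic OIDC providers.

```yaml
# Authelia configuration.yml (fragment) -- illustrative sketch, adjust to your setup
identity_providers:
  oidc:
    clients:
      - client_id: 'gpt-oidc'              # must match OAUTH_CLIENT_ID in the Compose file
        client_name: 'MattGPT'             # display name, assumption
        # Authelia expects a hashed digest of the secret here, not the plain string
        # used in OAUTH_CLIENT_SECRET; `authelia crypto hash generate` can produce one.
        client_secret: '<hashed digest of the client secret>'
        public: false
        authorization_policy: 'two_factor' # assumption; use whatever policy you prefer
        redirect_uris:
          - 'https://gpt.domain.com/oauth/oidc/callback'
        scopes:                            # same scopes as OAUTH_SCOPES
          - 'openid'
          - 'email'
          - 'profile'
          - 'groups'
        userinfo_signed_response_alg: 'none'
        token_endpoint_auth_method: 'client_secret_basic'
```

With `ENABLE_OAUTH_ROLE_MANAGEMENT` turned on, a user also needs to be a member of the `gpt-access` or `gpt-admin` group in Authelia before they can log in, since those are the values Open WebUI looks for in the `groups` claim.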

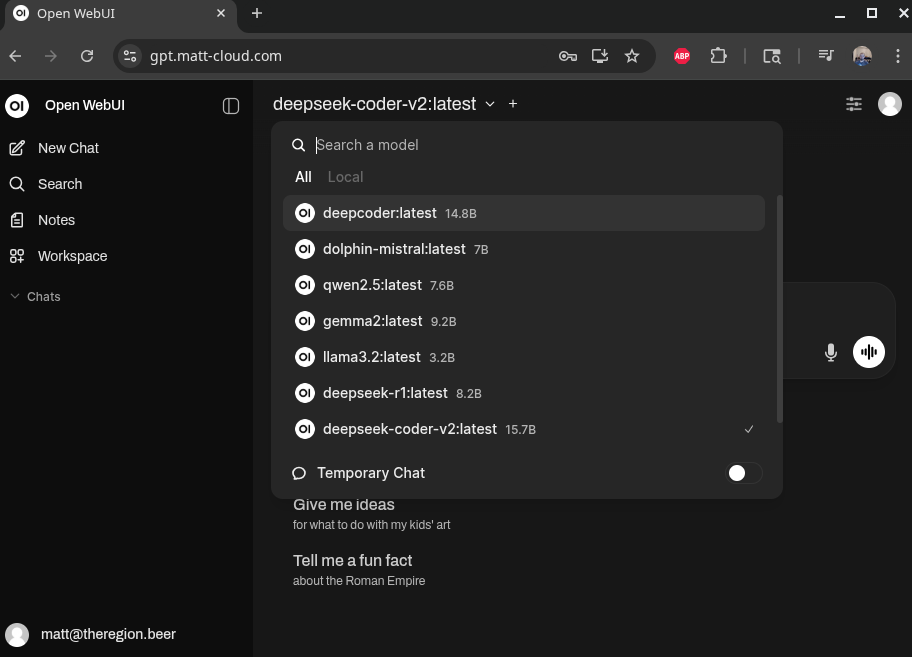

Sample Screenshot.